Hardware-enforced GPU partitioning

GPU workloads are segmented using MIG for NVIDIA and SR-IOV for AMD to create hardware-enforced GPU partitions.
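To illustrate how MIG (Multi-Instance GPU) carves one physical GPU into isolated slices, the sketch below checks whether a requested set of MIG profiles fits within a single GPU's compute and memory slice budget. It assumes an NVIDIA A100 40GB, which exposes 7 compute slices and 8 memory slices of 5 GB each. The profile table and the `fits_on_gpu` helper are illustrative assumptions, not Ori's scheduler, and other GPU models have different profiles. The real driver also enforces placement rules, so passing this check is necessary but not sufficient. AMD SR-IOV partitioning is not modeled here.

```python
# Assumed A100 40GB MIG profiles: name -> (compute slices, memory slices).
# Verify against NVIDIA's MIG documentation for your GPU model.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits_on_gpu(requested: list[str]) -> bool:
    """Return True if the requested profiles fit one GPU's slice budget.

    Capacity arithmetic only: the driver's placement rules are ignored,
    so a True result does not guarantee the layout can be created.
    """
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES
```

For example, `["3g.20gb", "4g.20gb"]` fits, but `["3g.20gb", "3g.20gb", "1g.5gb"]` does not: it uses only 7 compute slices yet needs 9 memory slices. Memory, not just compute, bounds how many tenants one GPU can host.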


Storage is isolated at the volume and namespace level with encryption and policy-based access controls.
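Here is a minimal sketch of policy-based, namespace-scoped volume access. It assumes a default-deny model in which every grant names a tenant, a namespace, a volume, and a set of allowed actions. `VolumePolicy`, `is_allowed`, and the tenant and volume names are hypothetical, and encryption is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VolumePolicy:
    """One grant: `tenant` may perform `actions` on `volume` in `namespace`."""
    tenant: str
    namespace: str
    volume: str
    actions: frozenset[str]


def is_allowed(policies: list[VolumePolicy], tenant: str, namespace: str,
               volume: str, action: str) -> bool:
    """Default deny: allow only if some grant matches every field exactly."""
    return any(
        p.tenant == tenant
        and p.namespace == namespace
        and p.volume == volume
        and action in p.actions
        for p in policies
    )


# Hypothetical grants for two tenants sharing one storage backend.
POLICIES = [
    VolumePolicy("tenant-a", "training", "datasets", frozenset({"read"})),
    VolumePolicy("tenant-a", "training", "checkpoints", frozenset({"read", "write"})),
    VolumePolicy("tenant-b", "inference", "models", frozenset({"read"})),
]
```

With these grants, `tenant-a` can write its own checkpoints. `tenant-b` cannot read `tenant-a`'s datasets because no grant matches that combination, even though both tenants share the same backend.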

Networking uses BGP EVPN/VXLAN overlays, InfiniBand partitioning, and SR-IOV virtual NICs to deliver high-performance virtual fabrics.
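VXLAN overlays and InfiniBand partitioning both isolate tenants by tagging traffic with an identifier. The sketch below shows the standard encodings. A VXLAN header carries a 24-bit VXLAN Network Identifier (VNI), laid out as in RFC 7348. Under the InfiniBand partition-key (P_Key) rule, two ports may communicate only if the low 15 bits of their keys match and at least one port is a full member (bit 15 set). The helper names and the tenant-to-VNI/P_Key values are hypothetical and do not describe how the Ori Control Plane assigns them.

```python
import struct

VXLAN_FLAG_I = 0x08          # "I" flag: the VNI field is valid (RFC 7348)
PKEY_FULL_MEMBER = 0x8000    # bit 15 of an InfiniBand P_Key
PKEY_BASE_MASK = 0x7FFF      # low 15 bits identify the partition


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def vni_of(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


def pkeys_can_communicate(a: int, b: int) -> bool:
    """Same partition base, and at least one side is a full member."""
    same_partition = (a & PKEY_BASE_MASK) == (b & PKEY_BASE_MASK)
    one_full_member = bool((a | b) & PKEY_FULL_MEMBER)
    return same_partition and one_full_member


# Hypothetical per-tenant identifiers: (VXLAN VNI, InfiniBand P_Key).
TENANT_NETWORKS = {
    "tenant-a": (5001, 0x8001),
    "tenant-b": (5002, 0x8002),
}
```

Traffic tagged with `tenant-a`'s VNI is never delivered into `tenant-b`'s overlay, and ports holding P_Keys `0x8001` and `0x8002` cannot exchange InfiniBand traffic because their partition bases differ.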

All segmentation policies are centrally enforced by the Ori Control Plane, ensuring consistent governance across public cloud, partner clouds, and customer-owned clusters.

GPUs are shared between customers with namespace separation.

GPUs are dedicated to individual customers under a shared control plane.

GPUs and the control plane are dedicated to a customer.
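The three tenancy modes above differ only in which layers a customer shares with others. This minimal sketch assumes each mode can be described by two flags. It picks the most resource-sharing mode that still meets a customer's isolation requirements. The mode labels, `TenancyMode`, and `choose_mode` are hypothetical names, not Ori's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenancyMode:
    label: str                     # hypothetical label, not an Ori product name
    dedicated_gpus: bool           # GPUs are not shared with other customers
    dedicated_control_plane: bool  # the control plane is not shared either


# Ordered from most sharing to least sharing.
MODES = [
    TenancyMode("shared-gpus", dedicated_gpus=False, dedicated_control_plane=False),
    TenancyMode("dedicated-gpus", dedicated_gpus=True, dedicated_control_plane=False),
    TenancyMode("fully-dedicated", dedicated_gpus=True, dedicated_control_plane=True),
]


def choose_mode(need_dedicated_gpus: bool,
                need_dedicated_control_plane: bool) -> TenancyMode:
    """Return the first (most shared) mode that meets every stated requirement."""
    for mode in MODES:
        if need_dedicated_gpus and not mode.dedicated_gpus:
            continue
        if need_dedicated_control_plane and not mode.dedicated_control_plane:
            continue
        return mode
    raise ValueError("no tenancy mode satisfies the requirements")
```

A customer who asks only for a dedicated control plane still lands in the fully dedicated mode, because none of the three modes pairs shared GPUs with a private control plane.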

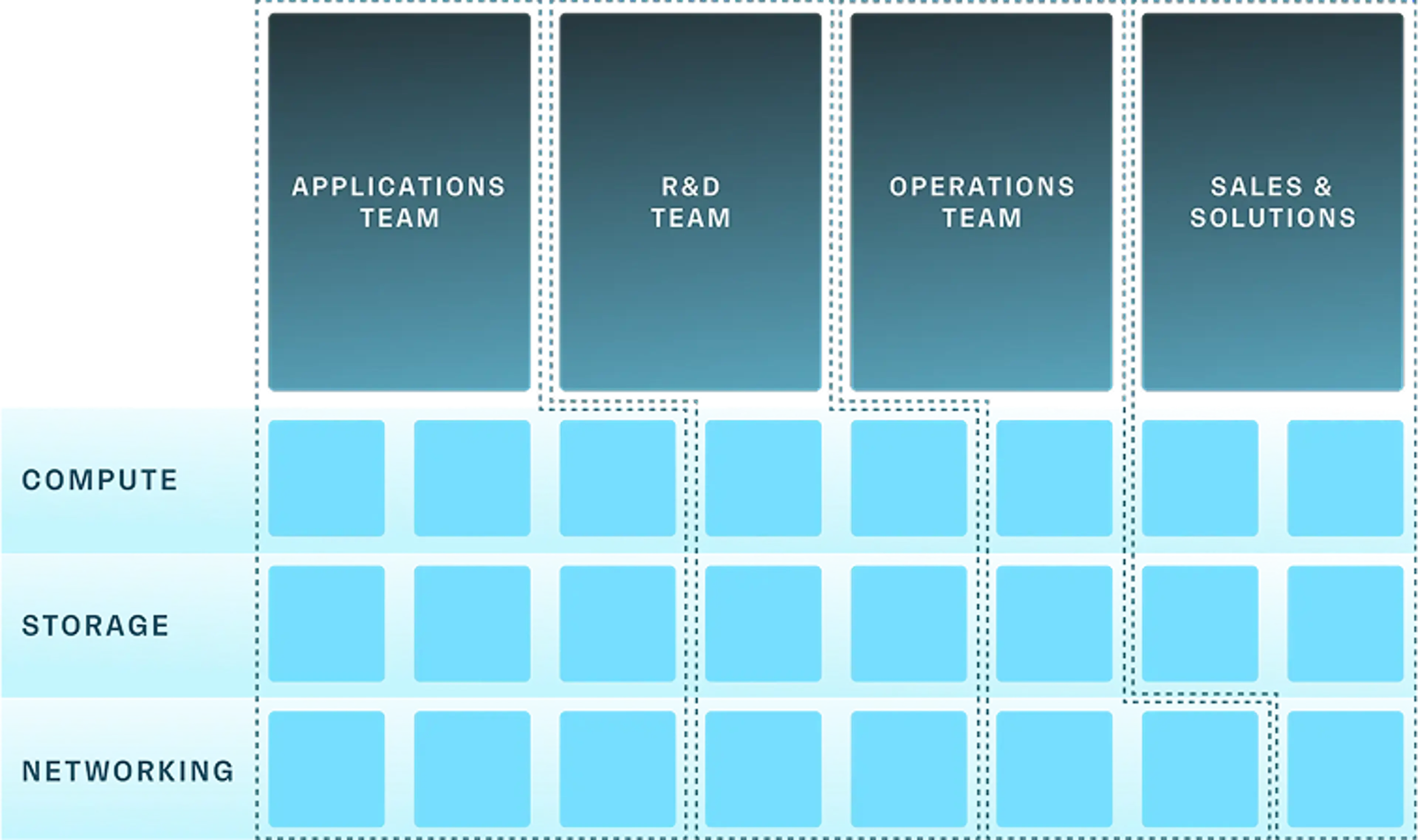

Empower every team to build fast, without compromising on security.

Hardware-accelerated segmentation across compute, storage, and networking under a single control plane for strict governance.

Multiple tenancy modes let you deploy both public and private AI clouds and serve a large addressable market.

Isolation is integrated within the platform and runs at bare-metal-level performance, with no off-platform isolation and no performance tax.

Ori offers both full-stack exclusivity and resource sharing to implement AI clouds at scale.

Host thousands of customers on shared infrastructure.

Strict multi-tenancy keeps your customer data, models and apps fully private.