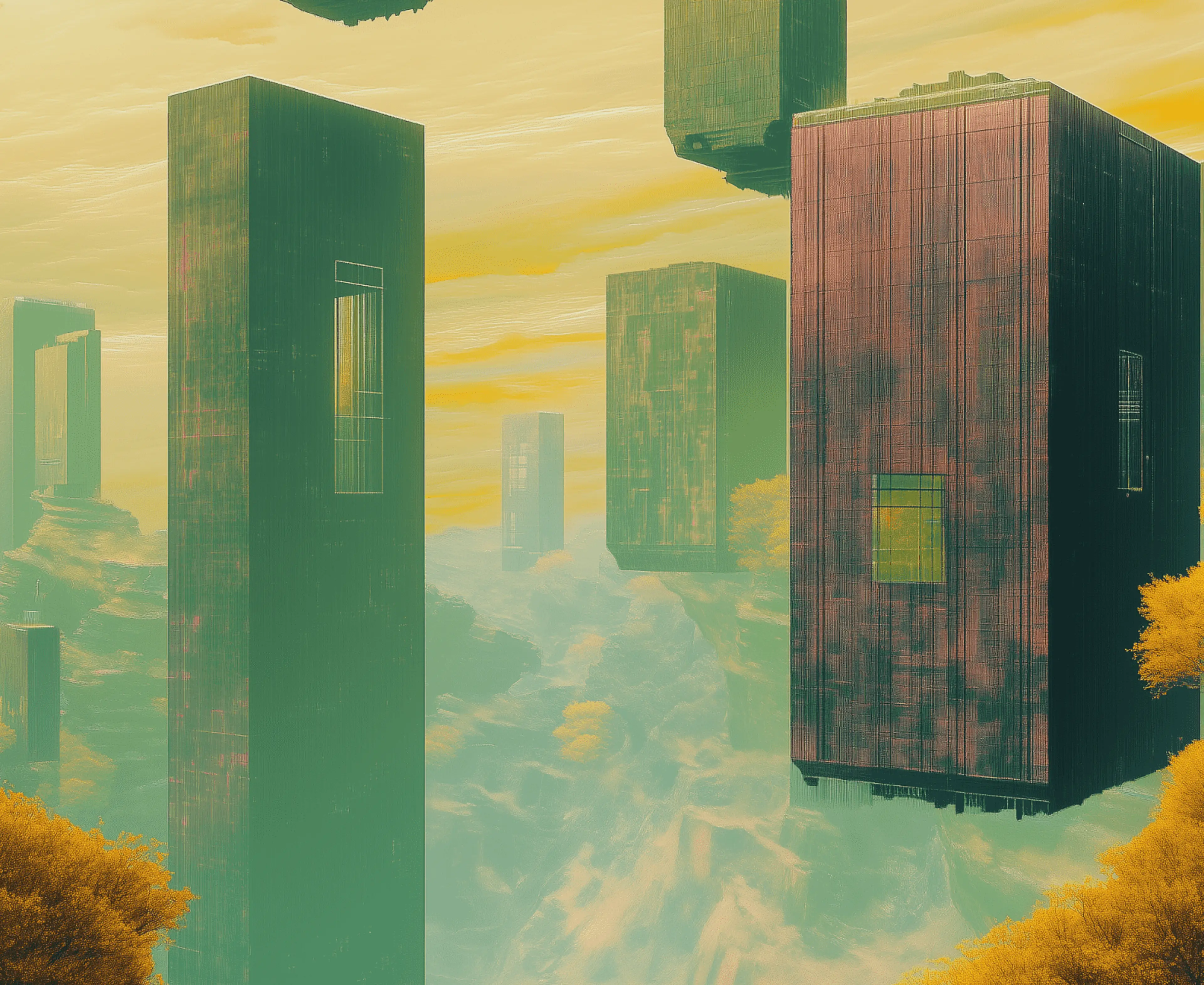

Serverless inference on Ori lets you run AI models with the infrastructure fully abstracted away: you send requests and get results. Automatic scaling, hardware-agnostic execution, and pay-per-token pricing keep it performant and cost-efficient at any scale. Whether you are prototyping or running in production, Ori makes inference simple, fast, and reliable.

Inference infrastructure

powered by Ori

What makes Ori Inference unique?

- Auto-scaling & multi-region

Scale automatically with demand (including scale-to-zero) across multiple regions.

- Effortless deployment

Built-in authentication, DNS management, and native integration with Registry and Fine-Tuning mean you can deploy with minimal setup.

- Serverless & dedicated GPU

Supports both serverless inference with pay-per-token usage and dedicated GPUs for workloads with strict performance or security requirements.

- Runs everywhere

Run on our infrastructure in the Ori cloud, or use our software to build your own inference platform.