The operating system for AI infrastructure

The Ori AI Fabric platform is a complete, end-to-end software stack for building, operating, and monetizing high-performance AI clouds. The same platform that powers our production Ori Cloud can be licensed to build your own AI-centric GPU compute cloud.

From our bare-metal-first architecture to our integrated MLOps services, Ori provides the foundation for telcos, governments, and enterprises to launch their own sovereign, private, or public AI cloud services with lower risk and faster time-to-market.

A GPU-centric cloud operating system

Global reach, sovereign control

One control plane for geographically distributed clusters, with the ability to enforce data residency and policy requirements.

GPU-aware scheduler

Intelligent workload placement eliminates idle GPUs so you can run more with less.

More AI, fewer GPUs

Fractional GPU sharing (NVIDIA Multi-Instance GPU, or MIG) and topology-aware bin-packing maximize utilization.

Zero-touch infra

Automatically provision, monitor, and heal infrastructure, handling failures and lifecycle management without manual operations.

Open ecosystem

Native support across GPU, storage, and networking vendors eliminates supply-chain risk and avoids lock-in.

Dependency-free architecture

A lightweight, homegrown stack scales easily without depending on third-party frameworks, ensuring roadmap freedom and a lower total cost of ownership (TCO).

Infrastructure-agnostic AI control plane

AI-native developer control

Full API, CLI, and SDK coverage lets you ship faster and manage everything programmatically.

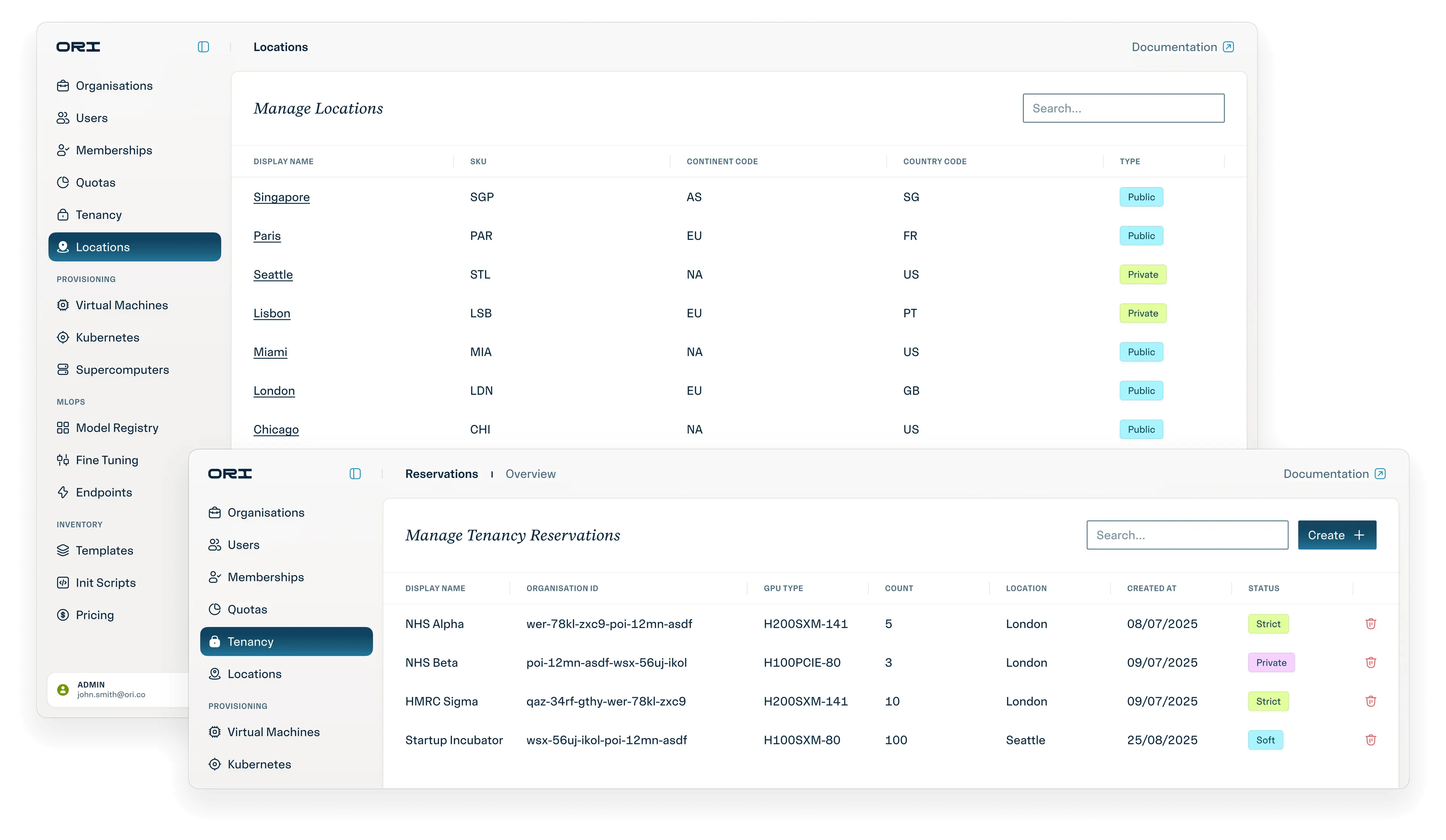

Flexible tenancy models

Provide a full spectrum of secure isolation, from flexible shared resources to fully private, hardware-isolated environments.

Hardware-enforced isolation

Bare-metal security without the virtualization tax, protecting both performance and ROI.

Your business model, integrated

Plug into any billing system, with real-time usage telemetry for chargebacks, subscriptions, and pay-as-you-go pricing.

Service catalog control

Define compute SKUs, pricing, and model and software availability per tenant.

Cloud-native AI services

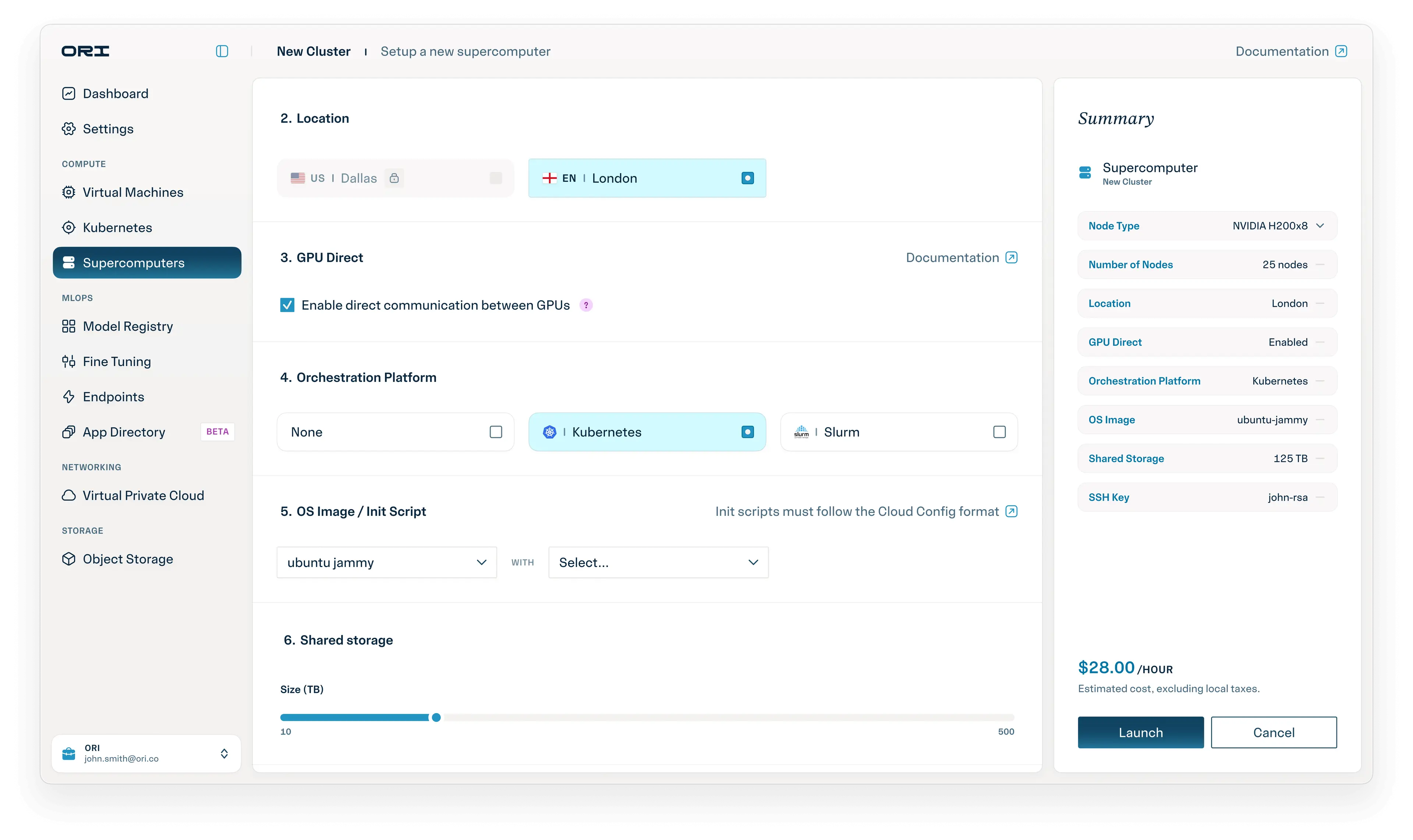

Supercomputing, on demand

Spin up multi-node GPU clusters with high-speed interconnects in minutes.

AI compute, on demand

Provision NVIDIA GPUs and other specialized accelerators as preconfigured instances.

Serverless Kubernetes for AI

Run elastic, resilient containers without managing infrastructure.

High-throughput data

Integrated with first-class object stores and parallel file systems to keep models fed with data.

MLOps AI services

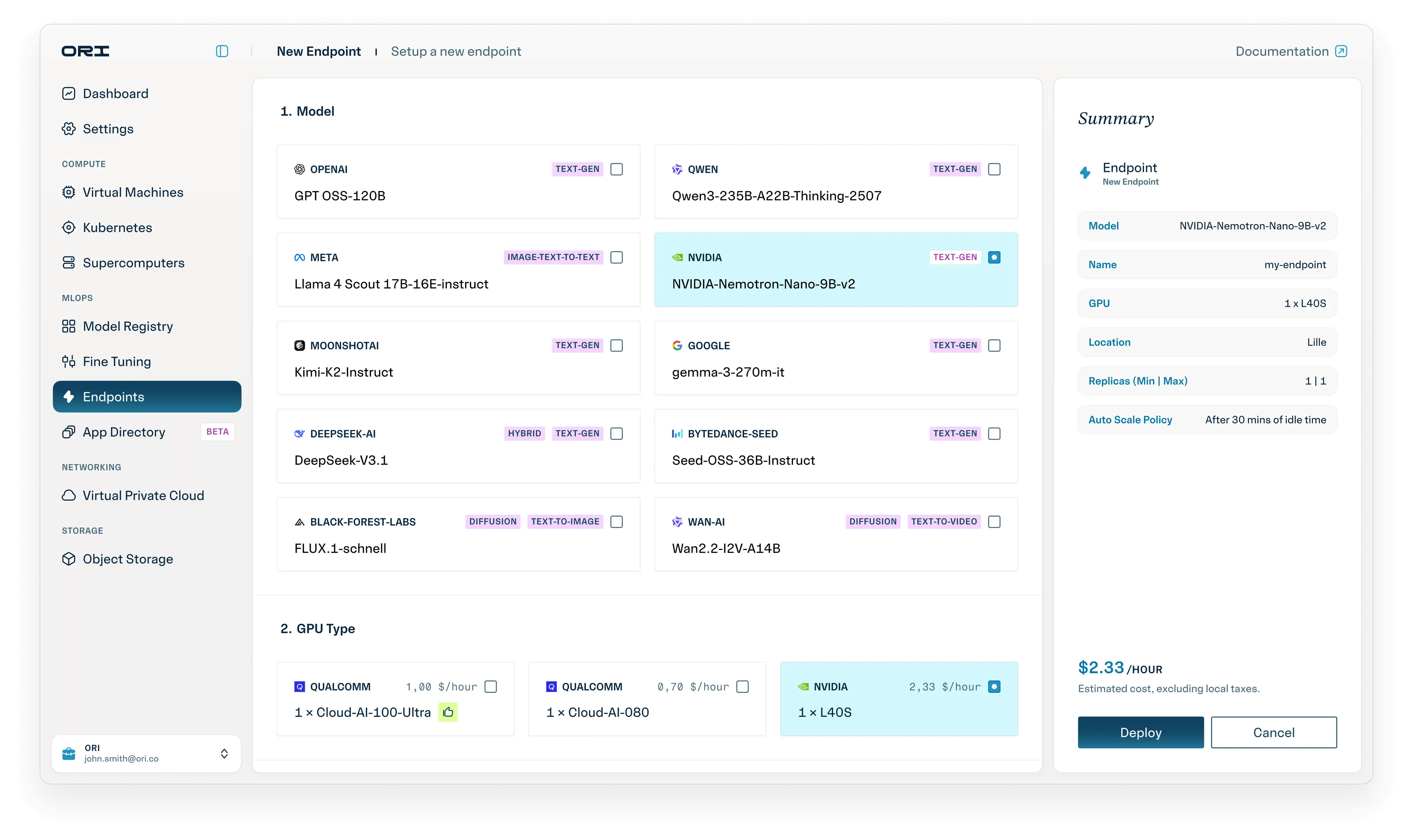

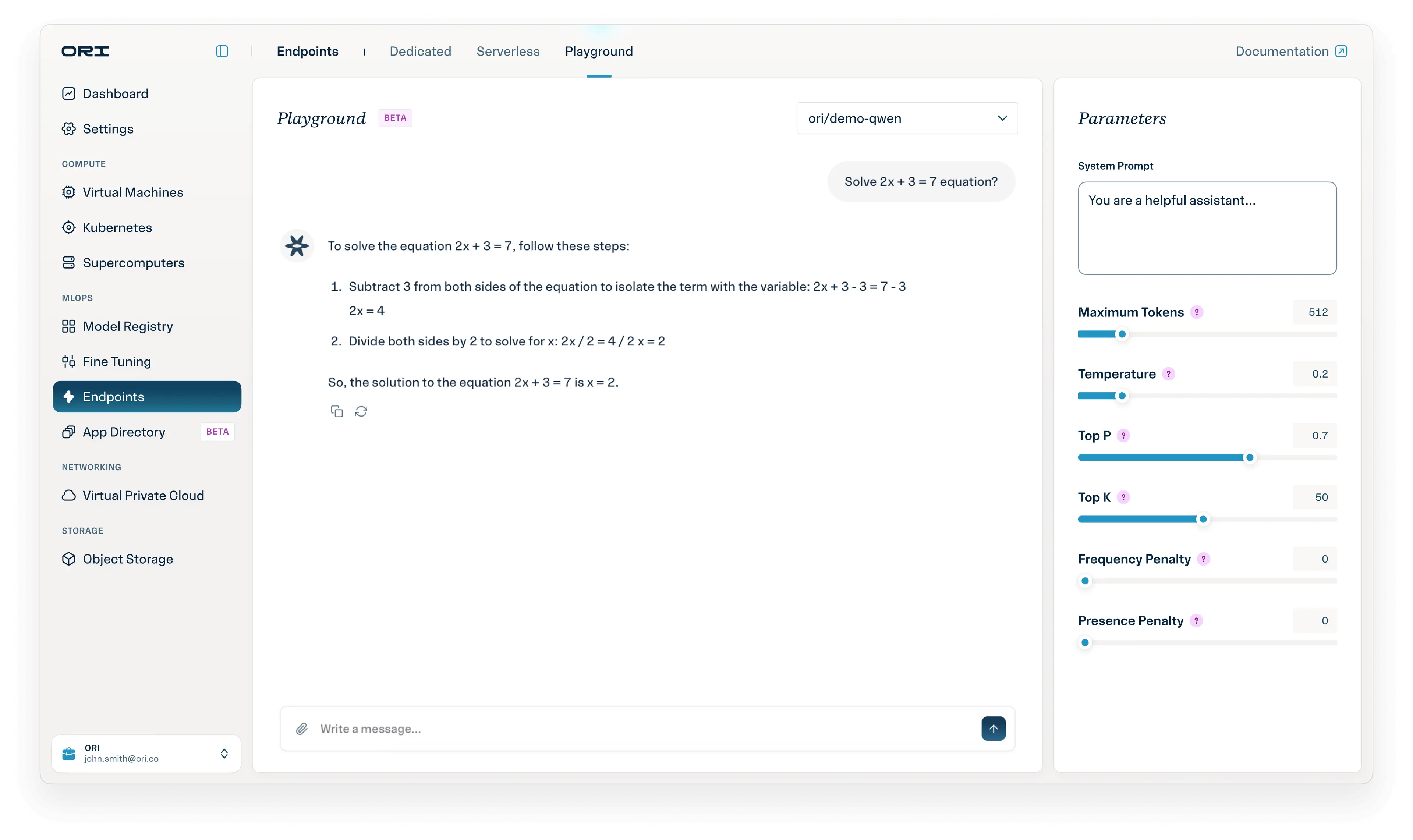

Production-grade inference

From serverless endpoints to dedicated hardware, for any model.

Location-aware model registry

Store, version, and distribute models to the right regions for the lowest latency.

One-click fine-tuning

Abstract away scripts and commands to turn open-source models into domain experts.

Scale-up training runway

From one GPU to thousands, backed by supercomputers and high-performance storage.

Powering AI with strategic partners

Our ecosystem of trusted partners ensures the highest performance, reliability, and scalability in AI compute.

Why our customers love Ori

Ori’s ease of use is so good that as the non-technical co-founder I’m able to manage GPU usage and understand billing clearly. Additionally, I’d give Ori 5-stars for affordability.